This tracker can be improved in several ways. In particular, it does not currently use temporal information. Because two consecutive frames look similar, the computational cost of tracking can be reduced: the face can be located in the second frame by searching only a sub-region of the image, centered on the face detected in the previous frame. This sub-region should be larger than the face.
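The sub-region described above can be sketched as follows. This is a minimal illustration, not the tracker's actual code: the function name `local_search_window`, the `(x, y, w, h)` box convention and the `scale` parameter are assumptions introduced here.

```python
def local_search_window(face_box, scale, image_size):
    """Return the sub-region (x0, y0, x1, y1) to search in the next frame.

    face_box   -- (x, y, w, h) of the face found in the previous frame
                  (hypothetical convention: top-left corner plus size)
    scale      -- size of the window relative to the face; must exceed 1
                  so that the window is larger than the face
    image_size -- (width, height) of the frame in pixels
    """
    if scale <= 1:
        raise ValueError("scale must be greater than 1")
    x, y, w, h = face_box
    img_w, img_h = image_size
    # Centre of the previously detected face.
    cx, cy = x + w / 2.0, y + h / 2.0
    # Half-extent of the enlarged search window.
    half_w, half_h = scale * w / 2.0, scale * h / 2.0
    # Clip the window to the frame boundaries.
    x0 = max(0, int(round(cx - half_w)))
    y0 = max(0, int(round(cy - half_h)))
    x1 = min(img_w, int(round(cx + half_w)))
    y1 = min(img_h, int(round(cy + half_h)))
    return x0, y0, x1, y1
```

For a 40 x 40 face at (100, 80) in a 320 x 240 frame, a scale of 2 gives the window (80, 60, 160, 140), which is four times smaller in area than the full-frame search in that case by a large margin.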

We used a hysteresis cycle to include this sub-region search in the tracker. The algorithm works as follows:

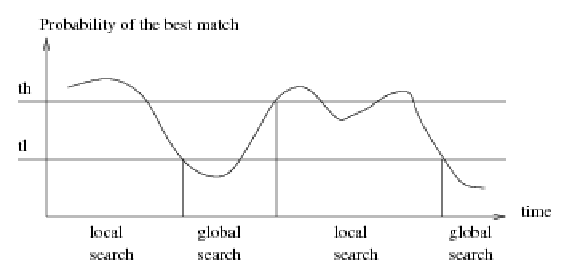

The tracker thus has two modes: a global search and a local search. It switches between them according to the value of the best match found in the current frame. Figure 4.3 shows how the hysteresis cycle works in our case.
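The mode switch can be illustrated with a small sketch. It assumes that a higher match value means a better match and that the cycle is defined by two thresholds, here called `t_low` and `t_high`. These names and the exact switching rule are assumptions made for illustration; the rule actually used is the one given in the figures.

```python
GLOBAL, LOCAL = "global", "local"

def next_mode(mode, best_score, t_low, t_high):
    """Hysteresis switch between global and local search.

    Assumes t_low < t_high and that larger scores are better matches.
    The tracker enters local mode only after a confident match
    (score >= t_high) and falls back to global mode only when the
    match becomes poor (score < t_low). Scores between the two
    thresholds leave the mode unchanged, which avoids rapid flipping.
    """
    if mode == GLOBAL and best_score >= t_high:
        return LOCAL
    if mode == LOCAL and best_score < t_low:
        return GLOBAL
    return mode

def trace_modes(scores, t_low, t_high, start=GLOBAL):
    """Return the mode selected after each frame's best score."""
    modes, mode = [], start
    for score in scores:
        mode = next_mode(mode, score, t_low, t_high)
        modes.append(mode)
    return modes
```

With `t_low = 0.4` and `t_high = 0.8`, a score of 0.7 keeps the tracker in whichever mode it is already in: this is the hysteresis effect.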

Experiments have shown that a factor ![]() was a good choice. If ![]() is too small, the local search often fails and the global search has to find the eyes, which results in a loss of speed. If ![]() is too big, the bounding box used to look for the eyes is too large, so the search is slower and the probability of false positives increases. The choice of ![]() is therefore important.

Unfortunately, the algorithm currently relies on constants that have to be determined manually. Indeed, ![]() and ![]() depend on many parameters, such as the lighting conditions or the distance between the face and the camera. It would be useful to determine the size of the bounding box automatically. A model of the motion of faces in similar conditions may provide a good estimate of this bounding box.
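As a rough illustration of how observed motion could set the bounding-box size, the sketch below derives a window scale from the largest displacement of the face centre between consecutive frames. This is not a method used in this work: the function `estimate_scale`, its `margin` and `min_scale` parameters, and the rule itself are hypothetical. The rule follows from requiring the window half-width to cover half the face plus the expected displacement.

```python
import math

def estimate_scale(centers, face_size, margin=1.5, min_scale=1.2):
    """Estimate the search-window scale from past face positions.

    centers   -- face centres (cx, cy) observed in consecutive frames
    face_size -- face width in pixels
    margin    -- safety multiplier applied to the observed displacement
    min_scale -- lower bound, so the window stays larger than the face

    If the face moves by at most d pixels per frame, a window of
    half-width face_size / 2 + d still contains it, which corresponds
    to a scale of 1 + 2 * d / face_size.
    """
    if len(centers) < 2:
        return min_scale
    # Largest displacement between two consecutive frames.
    max_disp = max(
        math.hypot(x1 - x0, y1 - y0)
        for (x0, y0), (x1, y1) in zip(centers, centers[1:])
    )
    scale = 1.0 + 2.0 * margin * max_disp / face_size
    return max(min_scale, scale)
```

A more faithful motion model would be learned from sequences recorded in similar conditions; the maximum displacement is only the simplest statistic that could play this role.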

Another improvement could come from choosing a better classification algorithm for the detection of the eyes. As an early attempt, a support vector machine (SVM) [11] was tried on eye data extracted from the training set. The results suggest that detection could be improved considerably with this method.