Bobick and Davis [6] are using a combination of motion energy image and motion history image to store templates of gestures in a database. The motion energy image describes the distribution of motion by summing the square of thresholded differences between successive pairs of images [5]. This gives blob likes images describing the areas where motion has been observed. The motion history image is a image template where the pixels represents the recency of the movement at the corresponding position. This images represents how a gesture is performed. These two images forms a vector that describes a gesture. The gestures are then discriminated using Hu moments on the motion energy image. These moments and other geometric features form the parameters of the motion energy image [5]. This is specific to their application and can be done using other methods for different applications. The recognition is done by comparing a gesture with the stored parameters using Mahalanobis distance. If this distance is less than a threshold, the similarity between motion history images is used to make the decision. If several gestures are selected, the one closest to the motion history image stored in the database is chosen. This approach has been extended in [19] by using a hierarchical motion history image instead of a fixed size one in order to improve recognition properties.

Jebara and Pentland [36] propose an Action-Reaction Learning (ARL) in order to synthesize human behaviour. After using the color classification [35], blobs corresponding to the position and orientation of the head and hands of a user are extracted using an expectation maximization algorithm. A Kalman filter is used to improve the tracking of the blobs. Time series approach is used to map past to future. A window is chosen in the past that represent the 128 last frames (about 6.5 seconds of video). This gives a series of vectors of dimension 3840, that is 15 parameters for the blobs by 2 individuals by 128 frames. The dimensionality of these vectors are then reduced using a principle component analysis to form a 40 parameter vector. The most recent vector and the most recent velocity are then concatenated to this vector to form the input vector of the system. This input vector encodes the past action of each frame. The corresponding output vector is given by the 30 dimensional vector representing the current frame. The conditional expectation maximization algorithm [37] is then used to find the probability of the location and direction of the blobs given the compressed past vector. After having observed the interaction between two users, the system is the able to display blobs that interact in a meaningful sense with a single user.

Brand and Kettnaker [8] introduce a new framework to learn hidden Markov models. Instead of the conventional Baum-Welch algorithm based on expectation maximization, their algorithm is based on entropy minimization. In practice, the M-step of the expectation maximization algorithm is modified to minimize the entropy of the data, the entropy of the model distribution and the cross-entropy between the expected statistic of the data and the model distribution instead of maximizing the likelihood. This method gives deterministic annealing within the expectation maximization framework and turns it into a quasi-global optimizer. It has been tested on the learning of the activity of an office. Contrary to the conventional way of learning hidden Markov model, this algorithm gives a learned model with meaningful hidden states such as : enter/exit activity, whiteboard writing, use of the phone or use of the computer. The transition matrix obtained is sparse and thus shows that the structure of the scene has been discovered. It has also been shown that it was more successful in detecting abnormal behavior.

Black and Jepson [4] are using the CONDENSATION algorithm to recognize signs written on a white board in order to control a computer. A training set of manually aligned signs are used to compute a model for each sign. Each model represents the mean trajectory of signs that represent the same action. A set of states is defined and represents the model used and the scale used to fit to the template stored for this model. When a new sign is presented to the system, probabilities of matching signs in the model database are computed by a chosen probability measure. A current state is chosen using the computed probabilities. The next state is then predicted from the current state by diffusion and sampling, as it is done in the CONDENSATION algorithm. If likelihood of this state is too small, the previous step is tried again up to a fixed number of tries. If the likelihood is still too small, a random state is chosen. A set of new weighted states are generated using this method by choosing different current weighted states. The gestures are then recognized using these weights.

Magee and Boyle [43] built a behaviour classifier called History Space Classifier. This classifier is based on Gaussian mixtures in cluster an history space. The history space is constructed by a succession of a spacial and a temporal operator. The spacial operator is the selection of the nearest prototype using a winner take all approach. The temporal operator used is simply a multiplication by a weight which is one over the time spent since the prototype has last been selected [41]. A more complex temporal operator could be used such as a set of leaky neurons as it has been done by Sumpter and Bulpitt [55]. A modified version of the expectation maximization introduced by Cootes and Taylor [16] is then used to model the point distribution function (pdf). In order to decrease the computational load of the algorithm, a principle component analysis is applied before the expectation maximization algorithm so that the size of the data set is reduced. The resulting pdf is then used to predict the next state in the sequence.

Magee and Boyle [42] are using cyclic hidden Markov models in order in conjunction with Black and Jepson's variant of the CONDENSATION algorithm. Their aim is to recognize cows having trouble to walk. Two cyclic hidden Markov models are used to model the cyclic motion of cows walking. One learns a typical behaviour of a healthy cow and the other one learns a lame behaviour. The cyclic hidden Markov models are integrated in the re-sampling CONDENSATION framework so that the model that describe a test behaviour more accurately dominates over time. The decision is then based on the number of samples produced for each model.

Fleet et al. [27] are using optical flow to learn motion in a sequence of images. A principle composent analysis is used on data obtained from optical flow algorithm to find the first order components of the velocity fields. It has been tried on the modelling of lips motion of a talking head. The first seven components found explained 91.4% of the variance. They have used this result to build a simple user interface controlled by the head and the mouth. The mouth gives orders such as "track", "release" or "print" and the head is used like a joystick in order to scroll when in tracking mode.

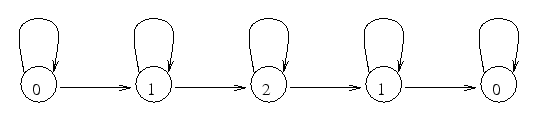

Hong, Turk and Huang [30] propose to recognize gestures of a user interacting with a computer by using finite state machines in order to model the gestures. User's head and hands are first tracked by the algorithm proposed by Yang et al. [58]. The spatial data are represented by states that are computed using dynamic ![]() -means. The Mahalanobis distance is used for the clustering. During the clustering, each time the improvement is small, the state with the largest variance is split into two state if the variance is larger than a previously chosen threshold. Therefore, the number of clusters increases until each cluster has a variance smaller than the threshold. The sequences of clusters during training gestures are then entered manually. The range of time we can stay in a state is then associated with each state. For instance figure 2.1 represent a sequence like {0,1,1,1,1,2,2,2,2,2,2,2,2,1,1,0} and the user can stay between 2 and 3 cycles in the state 1 during the recognition phase. The recognition of the gesture is done by simply following the links in the finite state machine. If the hand is close to the centroid of a state during a right number of cycles, then we go to the next state. If two gestures are recognized at the same time, the gesture which was closest to the centroids of the clusters is chosen.

-means. The Mahalanobis distance is used for the clustering. During the clustering, each time the improvement is small, the state with the largest variance is split into two state if the variance is larger than a previously chosen threshold. Therefore, the number of clusters increases until each cluster has a variance smaller than the threshold. The sequences of clusters during training gestures are then entered manually. The range of time we can stay in a state is then associated with each state. For instance figure 2.1 represent a sequence like {0,1,1,1,1,2,2,2,2,2,2,2,2,1,1,0} and the user can stay between 2 and 3 cycles in the state 1 during the recognition phase. The recognition of the gesture is done by simply following the links in the finite state machine. If the hand is close to the centroid of a state during a right number of cycles, then we go to the next state. If two gestures are recognized at the same time, the gesture which was closest to the centroids of the clusters is chosen.

|

Bregler [10] uses a hierarchical framework to recognize human dynamics in video sequences of persons running. The framework can be decomposed into four steps : the raw sequence, a model of movements by mixture of gaussians, a model of linear dynamics and a model of complex movements. The basic movements are modeled with a mixture of gaussians using the expectation maximization algorithm on a probabilistic estimation of the motion based on the gradient of the raw sequence. The gaussians are then grouped into blobs. Each blob is tracked by a Kalman filter. The Kalman filter is chosen to model a movement with constant velocity because we do not know the specific motion of each blobs. A cyclic hidden Markov model is then used to model the high level complexity of motion. The hidden Markov model is trained with a iterative procedure that require an initial guess of the model parameters. This guess is obtained by dynamical programming and is fine-tuned by the procedure.

Brand et al. [9] claim that classical hidden Markov model are not good at modelling correctly interactions. Indeed the Markov assumptions used to construct the models are false for most interaction data. It doesn't encode time properly. He investigated a new model called coupled hidden Markov model (see figure 2.2(c)) were a state does not depend only on the previous state but on several previous states that are intuitively modelling the state of each actor of the interaction. He also derived a efficient learning algorithm to train these coupled hidden Markov models [7]. He compared the classical hidden Markov model (see figure 2.2(a)), linked hidden Markov models introduced by Saul and Jordan [53], that model balance between actors of an interaction (see figure 2.2(b)), and coupled hidden Markov models (see figure 2.2(c)). The data set used was a set of Thai Chi gestures. It has been shown that coupled hidden Markov models outperforms the two other models. Linked hidden Markov models was generally better than classical hidden Markov models expect for particular moves where the dynamics of the gesture could not be capture correctly.

![\begin{figure}\begin{center}

\subfigure[Classical HMM]{

\epsfbox{hmm.eps}

}

\sub...

...m.eps}

}

\subfigure[Coupled HMM]{

\epsfbox{chmm.eps}

}

\end{center}

\end{figure}](img6.png) |

Galata, Johnson and Hogg [28] split trajectories into prototypes in order to model movement. The movements are then learned using a variable length Markov model. As this approach seems to be the state of the art in behaviour modelling, we studied the components of this approach, in particular the variable length Markov model (see section 5). The prototype clustering is done using a ![]() -means like algorithm described in section 4.3. The sequence of prototypes is then learned using the variable length Markov model. The advantage of this approach is that it can model join behaviour of people [38]. This is an essential characteristic for building a realistic human-computer interface.

-means like algorithm described in section 4.3. The sequence of prototypes is then learned using the variable length Markov model. The advantage of this approach is that it can model join behaviour of people [38]. This is an essential characteristic for building a realistic human-computer interface.