
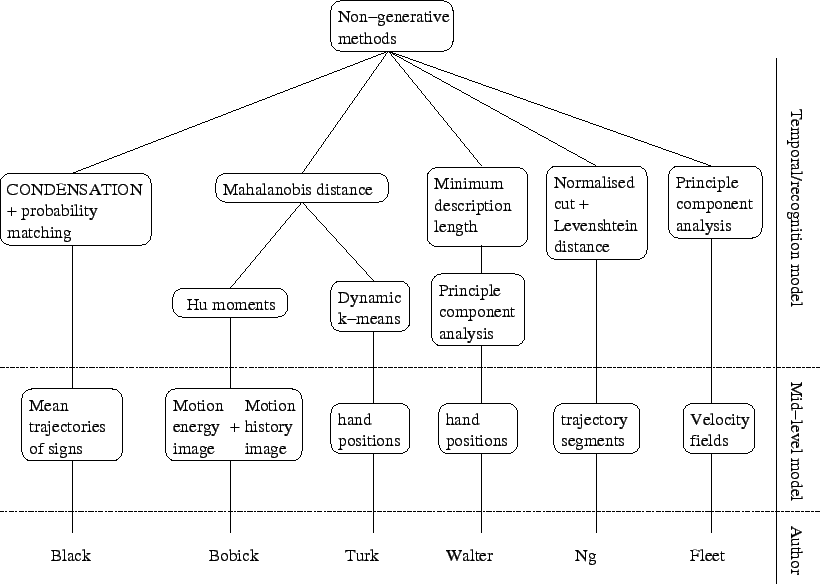

A classification of the non-generative methods is given in figure 2.2. These methods are usually used to recognise behaviour. However, a new sequence representing that behaviour cannot be generated directly from the models.

Fleet et al. [32] use optical flow to learn motion in a sequence of images. A principal component analysis is applied to the data obtained from an optical flow algorithm to find the first-order components of the velocity fields. The method was tested on modelling the lip motion of a talking head, where the first seven components explained 91.4% of the variance. They used this result to build a simple user interface controlled by the head and the mouth. The mouth gives commands such as "track", "release" or "print", and the head is used like a joystick to scroll when in tracking mode.

Black and Jepson [7] use particle filtering to recognise signs written on a whiteboard in order to control a computer. A training set of manually aligned signs is used to compute a model for each sign. Each model represents the mean trajectory of the signs that encode the same action. A set of states is defined, each state representing the model in use and the scale used to fit the input to the template stored for that model. When a new sign is presented to the system, the probabilities of it matching the signs in the model database are computed with a chosen probability measure. A current state is selected according to the computed probabilities. The next state is then predicted from the current state by diffusion and sampling, as in particle filtering algorithms. If the likelihood of this state is too small, the previous step is repeated, up to a fixed number of tries. If the likelihood is still too small, a random state is chosen. Applying this method to different current weighted states generates a set of new weighted states. The gestures are then recognised using these weights.
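The select, predict and retry loop described above can be sketched as follows. This is a minimal illustration and not the implementation of [7]: a state is assumed to be a `(model, scale)` pair, diffusion is assumed to be Gaussian noise on the scale only, and the function name `propagate`, the callbacks `likelihood` and `random_state`, and all default parameter values are hypothetical. The sketch also assumes that a retry repeats both the selection and the prediction.

```python
import random


def propagate(states, weights, likelihood, random_state, rng,
              n_out, noise=0.05, min_likelihood=1e-3, max_tries=5):
    """One propagation step over weighted (model, scale) states.

    likelihood(state) -> float and random_state(rng) -> state are
    hypothetical callbacks supplied by the caller.
    """
    new_states, new_weights = [], []
    for _ in range(n_out):
        candidate = None
        for _ in range(max_tries):
            # Select a current state with probability proportional to its weight.
            model, scale = rng.choices(states, weights=weights, k=1)[0]
            # Predict the next state by diffusion (assumed Gaussian on the scale).
            predicted = (model, scale + rng.gauss(0.0, noise))
            if likelihood(predicted) >= min_likelihood:
                candidate = predicted
                break
        if candidate is None:
            # Every try was too unlikely: fall back to a random state.
            candidate = random_state(rng)
        new_states.append(candidate)
        new_weights.append(likelihood(candidate))
    total = sum(new_weights)
    if total > 0:
        new_weights = [w / total for w in new_weights]
    else:
        new_weights = [1.0 / n_out] * n_out
    return new_states, new_weights
```

Summing the returned weights per model would give one possible recognition score for each sign; how the weights are actually combined is not specified in the description above.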

Bobick and Davis [10] use a combination of a motion energy image and a motion history image to store templates of gestures in a database. The motion energy image describes the distribution of motion by summing the square of thresholded differences between successive pairs of images [9]. This gives blob-like images describing the areas where motion has been observed. The motion history image is an image template in which each pixel represents the recency of the movement at the corresponding position. This image represents how a gesture is performed. Together, the motion energy image and the motion history image form a vector that describes a gesture. The gestures are then discriminated using Hu moments computed on the motion energy image. These moments and other geometric features form the parameters of the motion energy image [9]. This choice is specific to their application, and other methods can be used for different applications. Recognition is performed by comparing a gesture with the stored parameters using the Mahalanobis distance. If this distance is less than a threshold, the similarity between motion history images is used to make the decision. If several gestures are selected, the one closest to the motion history image stored in the database is chosen. This approach has been extended in [26] by using a hierarchical motion history image instead of a fixed-size one in order to improve recognition properties.
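The two templates can be illustrated with a small sketch. It assumes greyscale frames stored as nested lists, a binary motion mask obtained by thresholding absolute frame differences, and one common formulation of the motion history update (set a pixel to `tau` where motion occurs, otherwise decay it by one). The motion energy image is then taken as the set of pixels with non-zero history. The function names and the parameters `threshold` and `tau` are illustrative and are not taken from [9] or [10].

```python
def motion_masks(frames, threshold):
    """Binary masks marking pixels whose change between successive frames exceeds threshold."""
    masks = []
    for prev, curr in zip(frames, frames[1:]):
        masks.append([[1 if abs(c - p) > threshold else 0
                       for p, c in zip(prev_row, curr_row)]
                      for prev_row, curr_row in zip(prev, curr)])
    return masks


def motion_history_image(masks, tau):
    """Each pixel holds the recency of the last motion: tau if just moved, decaying to 0."""
    rows, cols = len(masks[0]), len(masks[0][0])
    mhi = [[0] * cols for _ in range(rows)]
    for mask in masks:
        for r in range(rows):
            for c in range(cols):
                if mask[r][c]:
                    mhi[r][c] = tau
                else:
                    mhi[r][c] = max(0, mhi[r][c] - 1)
    return mhi


def motion_energy_image(mhi):
    """Binary image of where motion occurred within the last tau masks."""
    return [[1 if value > 0 else 0 for value in row] for row in mhi]


# A bright pixel moving left to right across a 1x3 image.
frames = [[[9, 0, 0]], [[0, 9, 0]], [[0, 0, 9]]]
mhi = motion_history_image(motion_masks(frames, threshold=5), tau=2)
mei = motion_energy_image(mhi)
```

In this toy example the history values increase along the direction of movement, which is how the motion history image encodes the way a gesture is performed, while the energy image only records where motion happened.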

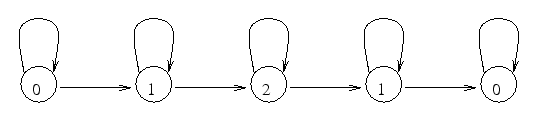

Hong, Turk and Huang [42] recognise the gestures of a user interacting with a computer by modelling those gestures with finite state machines. The user's head and hands are first tracked by the algorithm proposed by Yang et al. [99]. The spatial data are represented by states that are computed using dynamic k-means. The Mahalanobis distance is used for the clustering. During the clustering, each time the improvement is small, the state with the largest variance is split into two states if its variance is larger than a previously chosen threshold. Therefore, the number of clusters increases until each cluster has a variance smaller than the threshold. The sequences of clusters visited during the training gestures are then entered manually. The range of time that can be spent in a state is then associated with each state. For instance, figure 2.3 represents a sequence like {0,1,1,1,1,2,2,2,2,2,2,2,2,1,1,0}, and the user can stay between 2 and 3 cycles in state 1 during the recognition phase. The recognition of a gesture is done by simply following the links in the finite state machine. If the hand is close to the centroid of a state during a particular number of cycles, then the algorithm moves to the next state. If two gestures are recognised at the same time, the gesture that was closest to the centroids of the clusters is chosen.
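The recognition phase can be sketched as a walk through an ordered list of states, each with a centroid and an allowed range of cycles. This is a simplified illustration and not the implementation of [42]: closeness is assumed to be a Euclidean distance below a fixed `radius` (the clustering described above uses the Mahalanobis distance), and the `State` class, the `recognise` function and all numeric values are hypothetical.

```python
import math


class State:
    """A state of the gesture model with its allowed dwell time in cycles."""

    def __init__(self, centroid, radius, min_cycles, max_cycles):
        self.centroid = centroid
        self.radius = radius
        self.min_cycles = min_cycles
        self.max_cycles = max_cycles

    def contains(self, point):
        # Assumption: Euclidean closeness to the centroid.
        return math.dist(self.centroid, point) <= self.radius


def recognise(states, positions):
    """Return True if positions visit the states in order within their dwell ranges."""
    index, dwell = 0, 0
    for point in positions:
        current = states[index]
        if current.contains(point):
            dwell += 1
            if dwell > current.max_cycles:
                return False  # stayed too long in this state
        elif (dwell >= current.min_cycles
              and index + 1 < len(states)
              and states[index + 1].contains(point)):
            index, dwell = index + 1, 1  # follow the link to the next state
        else:
            return False  # left the model or moved on too early
    return index == len(states) - 1 and dwell >= states[index].min_cycles


gesture = [
    State((0.0, 0.0), 0.3, 1, 2),
    State((1.0, 0.0), 0.3, 2, 3),
    State((2.0, 0.0), 0.3, 1, 4),
]
accepted = recognise(gesture, [(0, 0), (1, 0), (1.1, 0), (2, 0)])
too_short = recognise(gesture, [(0, 0), (1, 0), (2, 0)])
```

Here `accepted` is true because the hand stays two cycles in the middle state, while `too_short` is false because it stays only one. Choosing between several simultaneously recognised gestures would additionally require accumulating the distances to the centroids, which is omitted here.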



In [95], Walter et al. model gestures by groups of trajectory segments. The trajectory segments are extracted by detecting discontinuities in the gesture trajectory. After normalising the trajectory segments, their dimensionality is reduced using a principal component analysis. Clusters are then extracted from the component space using an iterative algorithm based on minimum description length. The clusters form atomic gesture components. There is a parallel between groups of trajectory segments and the actions or visual units we want to extract from the video sequence. However, our segmentation and grouping algorithms both differ from theirs.

In [71], Ng and Gong use another algorithm to group trajectory segments. They use the Levenshtein distance to compare two trajectory segments. This distance is based on the dynamic time warping algorithm and a reinterpolation of the trajectory segments. An affinity matrix is constructed by comparing the segments pairwise. Their unsupervised version of the normalised cut algorithm is then applied to this affinity matrix to cluster the trajectory segments. An optimal threshold for the normalised cut algorithm is found by maximising the intra-cluster affinity while minimising the inter-cluster affinity.
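The construction of the affinity matrix can be illustrated with a plain dynamic time warping distance. This sketch is not the distance defined in [71]: it omits the reinterpolation step, and the mapping from distance to affinity, `exp(-d / sigma)`, as well as the names `dtw_distance`, `affinity_matrix` and `sigma`, are assumptions made for illustration.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping cost between two trajectories given as lists of points."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of the three admissible warping moves.
            cost[i][j] = step + min(cost[i - 1][j],
                                    cost[i][j - 1],
                                    cost[i - 1][j - 1])
    return cost[n][m]


def affinity_matrix(segments, sigma=1.0):
    """Pairwise affinities in (0, 1]; identical segments have affinity 1."""
    return [[math.exp(-dtw_distance(s, t) / sigma) for t in segments]
            for s in segments]


segments = [
    [(0, 0), (1, 0), (2, 0)],
    [(0, 0), (1, 0), (1, 0), (2, 0)],  # same path, traversed more slowly
    [(0, 1), (1, 1), (2, 1)],          # parallel path, shifted by one unit
]
affinities = affinity_matrix(segments)
```

The first two segments differ only in timing, so their warped distance is zero and their affinity is maximal, whereas the shifted segment has a lower affinity to both. A clustering algorithm such as normalised cut would then operate on `affinities`.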