
A Pattern Associator learns a mapping most reliably when that mapping is linearly separable. Adding a bias input also helps, because it gives the Pattern Associator more possible mappings: the output (or decision boundary) can be shifted away from the origin instead of being forced through it. Since the camera no longer points in a fixed direction, better features must be selected. The image histogram is no longer a suitable feature, because it carries little information about the direction in which the camera is pointing. Measuring the angles between the lines in the image and a fixed reference direction is a much better idea: these angles appear to change in the same way as the angle between the camera and the direction of the corridor. This is why a Hough transform was used to process the images for this kind of motor control.
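To make the role of the bias concrete, here is a minimal sketch of a single-layer linear Pattern Associator trained with the delta (Widrow-Hoff/LMS) rule. The learning rule, the class name `PatternAssociator`, and the parameters `lr` and `epochs` are assumptions for illustration; the text does not specify how its associator was trained. The bias is implemented as an extra input that is always 1.0.

```python
import random


class PatternAssociator:
    """Hypothetical single-layer linear associator with an optional bias input."""

    def __init__(self, n_inputs, n_outputs, use_bias=True, lr=0.1, seed=0):
        self.use_bias = use_bias
        self.lr = lr
        rng = random.Random(seed)
        n_in = n_inputs + (1 if use_bias else 0)
        # weights[j][i] connects input i to output j; small random start.
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                        for _ in range(n_outputs)]

    def _augment(self, x):
        # Append a constant 1.0 input so its weight acts as the bias term.
        return list(x) + [1.0] if self.use_bias else list(x)

    def predict(self, x):
        xa = self._augment(x)
        return [sum(w * xi for w, xi in zip(row, xa)) for row in self.weights]

    def train_step(self, x, target):
        # Delta rule: move each weight along error * input.
        xa = self._augment(x)
        y = self.predict(x)
        for j, row in enumerate(self.weights):
            err = target[j] - y[j]
            for i in range(len(row)):
                row[i] += self.lr * err * xa[i]

    def fit(self, samples, epochs=500):
        for _ in range(epochs):
            for x, target in samples:
                self.train_step(x, target)


# Learn y = 2x + 1, which needs a non-zero offset.
samples = [([x], [2 * x + 1]) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]
with_bias = PatternAssociator(1, 1, use_bias=True)
with_bias.fit(samples)
no_bias = PatternAssociator(1, 1, use_bias=False)
no_bias.fit(samples)
```

Without the bias input, a zero input always yields a zero output, so the offset of 1 in y = 2x + 1 can never be represented; with the bias, the associator can fit the mapping exactly.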

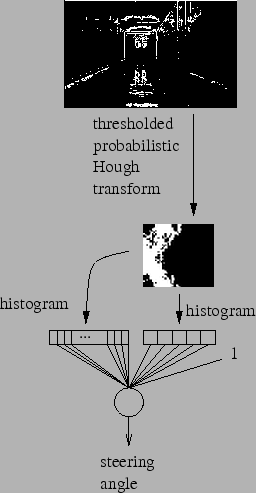

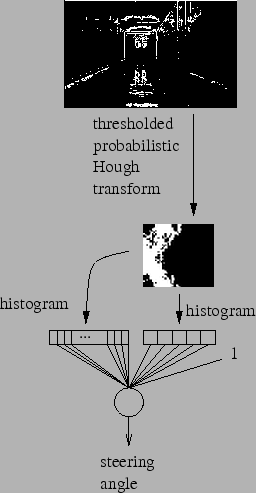

Figure 3.8 shows the process used to determine the steering angle. Each image grabbed by the robot is first passed through an edge detector, and the Hough space of the resulting edge image is then computed using the probabilistic Hough transform. Next, both the vertical and the horizontal histograms of the Hough space are computed. Because the positions of the lines in the image matter less than their angles in our case, the two histograms use different stripe widths: 45 stripes were used for the histogram of line angles, but only 5 stripes for the histogram of the distances of the detected lines from the center of the image.
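The feature extraction step can be sketched as follows. This assumes, hypothetically, that the probabilistic Hough transform returns line segments as `(x1, y1, x2, y2)` tuples (as OpenCV's `HoughLinesP` does); each segment is reduced to its angle in [0°, 180°) and its perpendicular distance from the image center, and the two quantities are binned into 45 and 5 stripes respectively. The function name `hough_features` and the choice of counting one vote per segment are assumptions, not the method used in the text.

```python
import math


def hough_features(segments, width, height, n_angle_bins=45, n_dist_bins=5):
    """Hypothetical sketch: angle and distance histograms of line segments."""
    cx, cy = width / 2.0, height / 2.0
    max_dist = math.hypot(cx, cy)  # a line cannot be farther from the center
    angle_hist = [0] * n_angle_bins
    dist_hist = [0] * n_dist_bins
    for x1, y1, x2, y2 in segments:
        dx, dy = x2 - x1, y2 - y1
        length = math.hypot(dx, dy)
        if length == 0:
            continue  # degenerate segment: no direction
        # Line direction folded into [0, 180) degrees.
        angle = math.degrees(math.atan2(dy, dx)) % 180.0
        # Perpendicular distance from the center to the infinite line.
        dist = abs((x1 - cx) * dy - (y1 - cy) * dx) / length
        a_bin = min(int(angle / 180.0 * n_angle_bins), n_angle_bins - 1)
        d_bin = min(int(dist / max_dist * n_dist_bins), n_dist_bins - 1)
        angle_hist[a_bin] += 1
        dist_hist[d_bin] += 1
    # Fine angle resolution, coarse position resolution: 45 + 5 values.
    return angle_hist + dist_hist


segments = [(0, 50, 100, 50),   # horizontal, through the center
            (50, 0, 50, 100),   # vertical, through the center
            (0, 0, 100, 0)]     # horizontal, along the top edge
features = hough_features(segments, 100, 100)
```

The concatenated 50-value vector is the kind of input the Pattern Associator would receive; the coarse distance histogram keeps some positional information without letting it dominate the angles.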


Furthermore, I used the joystick to teach the robot. During the training sequence, each time an image is grabbed, the current steering setting is read to obtain the corresponding steering angle. Because the joystick overrides the program's control of the motors, the steering angle can be changed with the joystick while training. During the testing stage, the joystick is unplugged, and the steering angle is controlled by the program alone.
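The training and testing stages can be summarized in a short sketch. The robot interfaces are passed in as plain functions because the real ones are not described here; `read_steering`, `extract_features`, `predict_angle`, and `set_steering` are all hypothetical names.

```python
def collect_training_set(frames, read_steering, extract_features):
    """Training stage: pair each grabbed frame with the steering in effect.

    The joystick overrides the motor control, so the steering read here is
    the one chosen by the human operator at the moment the frame is grabbed.
    """
    samples = []
    for frame in frames:
        angle = read_steering()  # hypothetical: current (joystick) steering
        samples.append((extract_features(frame), angle))
    return samples


def drive(frames, extract_features, predict_angle, set_steering):
    """Testing stage: no joystick, so the program alone sets the steering."""
    for frame in frames:
        set_steering(predict_angle(extract_features(frame)))


# Usage with stand-in data instead of a real camera and joystick.
frames = [1, 2, 3]
joystick_angles = iter([10, -5, 0])
samples = collect_training_set(frames, joystick_angles.__next__,
                               lambda f: [f * 2])
commands = []
drive(frames, lambda f: [f * 2], lambda feat: feat[0] + 1, commands.append)
```

The collected `(features, angle)` pairs are what the Pattern Associator is trained on; at test time the same feature extraction feeds the trained associator instead of the joystick.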